Differential privacy is a technique that lets Apple collect information about how iOS and macOS are used without compromising your privacy. Users participate voluntarily, and Apple takes extra measures to prevent anyone from finding out who the data came from. This guide explains what differential privacy is and how Apple uses it. Because of Apple’s stricter privacy policies, the company can sometimes offer fewer features than competitors, simply because it has less detailed personal information. To compensate, Apple tries to process as much user data as possible locally, so that it doesn’t leave your device.

- Where can I find differential privacy data?

- Differential privacy: not required

- What does Apple use differential privacy for?

- Collect user data

- Apple’s approach: local as much as possible

- Why does Apple add noise?

- Differential Privacy: Applications

- What’s in it for you?

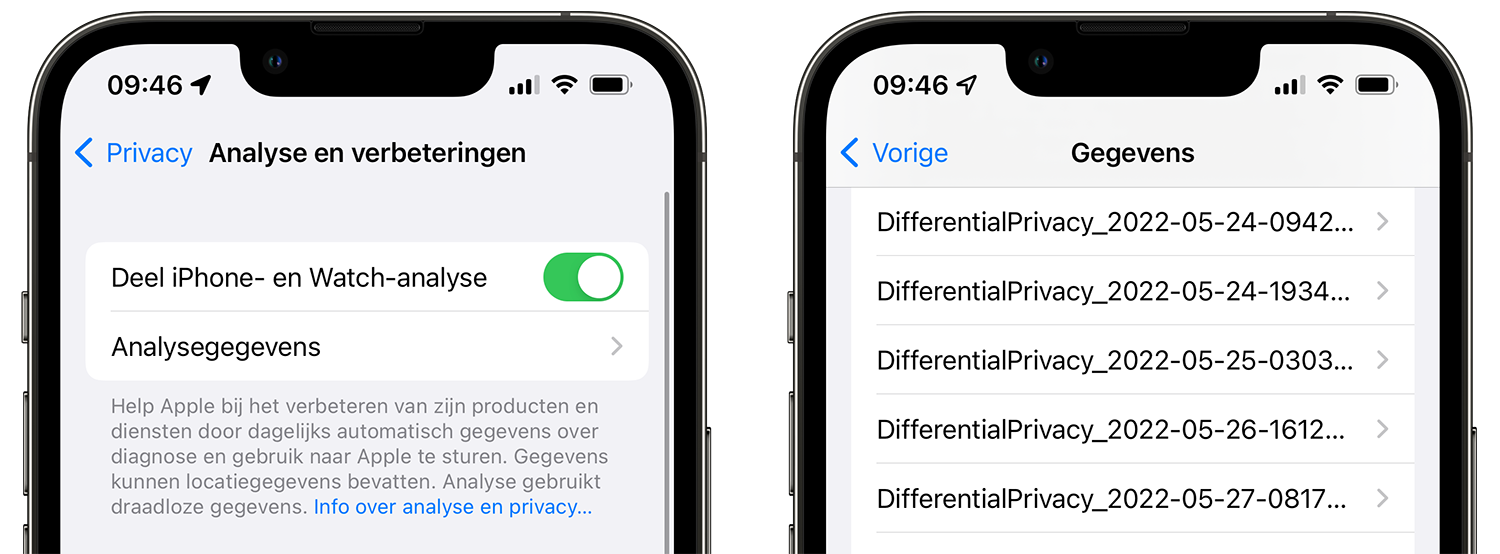

Where can I find differential privacy data?

You can find the data that Apple collects with differential privacy on your device:

- Go to the Settings app on your iPhone or iPad.

- Go to Privacy > Analytics & Improvements > Analytics Data.

- Scroll down to the items that begin with “DifferentialPrivacy”, as shown in the screenshots.

- Optionally, open these files to check whether you are comfortable sharing this data.

Differential privacy: not required

Differential privacy was first introduced in iOS 10 and macOS Sierra. It makes it possible to analyze user data without endangering your privacy. In short, algorithms ‘hide’ your data among a large amount of other data. Apple deliberately adds extra “noise” so that properties or locations cannot realistically be traced back to you as a person.

Participation in differential privacy is voluntary. It is disabled by default in iOS and macOS and you must consciously consent to participate.

What does Apple use differential privacy for?

For example, Apple uses differential privacy to gain insight into:

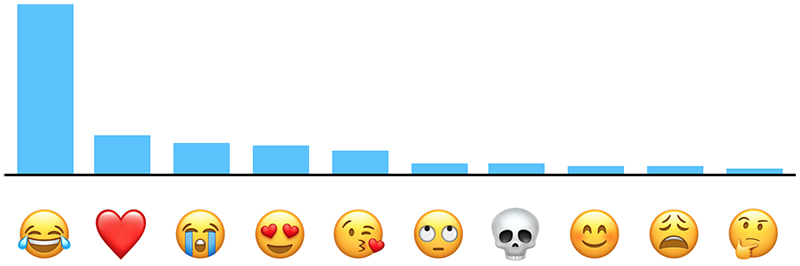

- Which emoji people use, so that it can make better suggestions.

- How people use language, so that suggestions can take slang and new words into account.

- Which hints to give when you look up words.

- Which websites crash frequently or consume a lot of power.

- How people use Health data.

For example, Apple announced that the “face with tears of joy” emoji is the most used emoji in the US.

Collect user data

Companies collect data for all sorts of reasons. Usually they promise that they need the data to improve their services, but it also happens that data is used to build a better profile of you or to resell the data to marketing companies. For example, Google analyzes your searches so that they can build a profile of you in order to show you suitable advertisements. Facebook uses your profile information to ensure that companies can target advertising. In return, the services of Google and Facebook are free: you pay by giving away a piece of privacy.

Apple doesn’t take part in this: the company repeatedly emphasizes that it makes money by selling products, not customer data. Apple also promises not to build a profile of its customers. Yet services like Siri are becoming more and more personal, and it seems that Apple knows more and more about you. How does that fit together?

Apple’s approach: local as much as possible

Apple uses a technique called local differential privacy, a term that is also used in the research world. “Local” means that the data is randomized on your device before anything is sent, and that as many operations as possible take place on the device itself. This reduces the chance that privacy-sensitive information is intercepted, and it makes it more difficult to determine which user provided what information.

Many of Apple’s intelligent features run locally on your device, such as facial recognition in the Photos app on iOS and proactive information about travel times to work. Apple does not send this information to a server. Because the data is stored locally on your device, which is protected with a passcode, fingerprint or face scan, other people cannot easily access it.

You can read more about Apple’s approach in this document. Apple has also published a scientific article in the Machine Learning Journal, in which they provide more insight into their approach.

Why does Apple add noise?

If companies want to collect user data from you, they usually promise to anonymize it. To do so, they remove identifying details, such as your home address and the combination of name and date of birth. But that is not enough: studies have repeatedly shown that anonymous data can be traced back to a specific person with some effort. Especially if someone knows your location or has access to your photos (as is the case with many apps), they can work out who you are.
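To make the problem concrete, here is a minimal sketch of how “anonymous” records can be linked back to people. All data, field names and the `reidentify` helper are invented for illustration; the point is only that a few leftover attributes can be enough to single someone out.

```python
# Hypothetical "anonymized" records: names are removed, but a few
# seemingly harmless attributes (quasi-identifiers) are kept.
anonymized = [
    {"postcode": "1011", "birth_year": 1984, "gender": "F", "searched_for": "asthma"},
    {"postcode": "1011", "birth_year": 1990, "gender": "M", "searched_for": "mortgage"},
    {"postcode": "3511", "birth_year": 1984, "gender": "F", "searched_for": "pizza"},
]

# Hypothetical public data (for example a social profile) that includes names.
public = [
    {"name": "Alice", "postcode": "1011", "birth_year": 1984, "gender": "F"},
    {"name": "Bob", "postcode": "1011", "birth_year": 1990, "gender": "M"},
]

QUASI_IDENTIFIERS = ("postcode", "birth_year", "gender")


def key(record):
    """Combine the quasi-identifiers of a record into one lookup key."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def reidentify(anonymized_rows, public_rows):
    """Link a named person to an anonymized row when the match is unique."""
    by_key = {}
    for row in anonymized_rows:
        by_key.setdefault(key(row), []).append(row)

    matches = {}
    for person in public_rows:
        candidates = by_key.get(key(person), [])
        if len(candidates) == 1:  # exactly one row fits this person
            matches[person["name"]] = candidates[0]["searched_for"]
    return matches


linked = reidentify(anonymized, public)
print(linked)
```

Removing names did not help here: the combination of postcode, birth year and gender was unique for both people, so their records could be linked anyway.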

Apple therefore takes a different approach. It adds extra noise (random fake data) locally on the device, before the data is sent to an Apple server. As a result, Apple does not know which data is real and which is fake. Across large numbers of users the noise averages out, so trends can still be discovered.
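The idea of adding noise on the device can be illustrated with randomized response, a classic textbook technique. This is a simplified sketch and not Apple’s actual algorithm; the function names, the 50% probability and the simulated users are assumptions made for the example.

```python
import random


def randomize(truth: bool, rng: random.Random, p_truth: float = 0.5) -> bool:
    """Runs on the device: report the real answer with probability p_truth,
    otherwise report the outcome of a fair coin flip."""
    if rng.random() < p_truth:
        return truth
    return rng.random() < 0.5


def estimate_rate(reports, p_truth: float = 0.5) -> float:
    """Runs on the server: undo the known noise on average.
    Expected share of 'yes' reports = p_truth * rate + (1 - p_truth) * 0.5."""
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth) * 0.5) / p_truth


rng = random.Random(42)  # fixed seed so the simulation is repeatable

# Simulated population: roughly 30% of users have some property.
users = [rng.random() < 0.30 for _ in range(100_000)]

# Each device sends only its noisy answer.
reports = [randomize(answer, rng) for answer in users]

true_rate = sum(users) / len(users)
estimate = estimate_rate(reports)
print(f"true rate: {true_rate:.3f}, estimated from noisy reports: {estimate:.3f}")
```

A single report says little about the person who sent it, because it may well be a coin flip. Only the aggregate over many users gives a usable estimate.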

Differential Privacy: Applications

When Apple announced differential privacy in iOS 10 and macOS Sierra, they initially emphasized it would only apply to four areas:

- New words that users add to their local dictionaries.

- Emoji that you type, so Apple can suggest replacement emoji.

- Deep links within apps, which are allowed to be publicly indexed.

- Searches within the Notes app.

Two examples: if many users suddenly add the word ‘Brexit’ to their local dictionary, Apple can conclude that it has apparently become a commonly used word. It can then add the word to everyone’s dictionary.

The same goes for emoji: if many people use a pizza slice emoji when they talk about pizza, Apple knows that replacing “pizza” with such an emoji is common.
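Counting popular words or emoji from noisy reports can be sketched with generalized randomized response, a standard local differential privacy mechanism. This is not the algorithm Apple ships; the small emoji vocabulary, the `epsilon` value (a common name for the privacy parameter) and the simulated usage are all assumptions for illustration.

```python
import math
import random
from collections import Counter

EMOJI = ["😂", "🍕", "👍", "🎉"]  # hypothetical, tiny vocabulary


def keep_probability(epsilon: float) -> float:
    """Probability that a device reports its real value."""
    k = len(EMOJI)
    return math.exp(epsilon) / (math.exp(epsilon) + k - 1)


def privatize(value: str, epsilon: float, rng: random.Random) -> str:
    """Runs on the device: keep the real emoji with probability p,
    otherwise report a different emoji chosen uniformly at random."""
    if rng.random() < keep_probability(epsilon):
        return value
    return rng.choice([e for e in EMOJI if e != value])


def estimate_counts(reports, epsilon: float) -> dict:
    """Runs on the server: correct the observed counts for the known noise.
    Expected observed count = true * p + (n - true) * q."""
    n = len(reports)
    p = keep_probability(epsilon)
    q = (1 - p) / (len(EMOJI) - 1)
    observed = Counter(reports)
    return {e: (observed[e] - n * q) / (p - q) for e in EMOJI}


rng = random.Random(7)  # fixed seed so the simulation is repeatable
epsilon = 1.0           # assumed privacy parameter; smaller means more noise

# Simulated real usage: one emoji is clearly the most popular.
true_values = rng.choices(EMOJI, weights=[50, 25, 15, 10], k=50_000)
reports = [privatize(v, epsilon, rng) for v in true_values]

true_counts = Counter(true_values)
estimates = estimate_counts(reports, epsilon)
for e in EMOJI:
    print(e, "true:", true_counts[e], "estimated:", round(estimates[e]))
```

With these settings more than half of the individual reports are fake, yet the popularity ranking is still recoverable from the totals.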

Apple tries to analyze as much data locally as possible, but if it wants to learn from usage patterns across the huge number of iPhone and Mac users, it still has to send data to a server. Differential privacy ensures that your data is ‘mixed’ with deliberately added fake data. All data that iOS and macOS send to Apple’s servers is, of course, encrypted.

What’s in it for you?

It may seem as if differential privacy was created only to allow Apple to collect more data about you. But in the end, it is mainly users who should benefit from it. You notice it when using iMessage, for example: the app is better at predicting words based on new slang or commonly used terms (think of the aforementioned example of ‘Brexit’, a term that did not exist before).

Spotlight also learns which results are tapped most often, so that popular options and functions appear higher in the list. As a result, iOS can better predict what people want to do, which makes it more pleasant to use. Of course, this only works if enough people participate.

As already indicated, differential privacy is not mandatory (it works with opt-in). It is disabled by default and you must give permission to participate.

The advantage for Apple is that it can work with large amounts of user data without that data being traceable to individual users. If a government agency demands the data, nothing can be derived from it except general trends and patterns.