Artificial Intelligence is here to stay. Artificial Intelligence is reaching a large number of sectors, not just computing, to reduce the time needed to perform certain tasks, although, for the moment, it is not always the best option since they are not perfect. In recent months, AIs have become popular among the general public to generate texts and images, the latter being the one that offers us the most spectacular results if we know how.

Most of the Artificial Intelligences available both for free and for a fee to generate images function in much the same way. We just have to enter a description of the image that we want to be generated. However, the most advanced ones, such as Midjourney, with the release of version 5, have not only substantially improved the possibilities when it comes to generating any type of image, but also offer the possibility of using natural language in the description. of the image we want to generate.

Better AIs to generate images

midjourney, as we have commented in the previous paragraph, is currently the most complete AI and now offers the best results for creating any type of image based on a description, however, as it is a paid tool, unless Unless we are willing to pay, it is not an option. The same goes for DALL-E of OpenAI, another interesting option that also forces us to checkout if we want to generate images.

Another option, this time totally free, is Stable Diffusion, a software that we can install on our PC or use via web the same as Midjourney or DALL-E and that do not have any type of limitation of use. We can also use the platforms Lexicawhich allows us to create a limited number of images for free.

Texts to generate images with AI

Regardless of which AI we are going to use to generate images, if we want to obtain the best results that the platform is capable of offering us, we must offer as much information as possible in the sections that we show you below.

Although most of the AIs to generate images allow us to enter the texts in Spanish, since it is responsible for translating them into English to interpret them correctly, it is advisable to use Shakespeare’s language to avoid losing details or misinterpreting any details during the translation. word.

Reason

The first thing that we must enter in the description of the image that we are going to create is whether it is a person, animal, object or reason (worth the redundancy) so that the corresponding AI knows where to shoot. In addition, we must also establish how we want it to be shown, with a certain type of clothing, with a sword in the hands, with a background mist in the case of being an image.

With the description: “3D render of a cute tropical fish in an aquarium on a dark blue background, digital art” we get the following image.

Background

When it comes to people, animals or objects, if we want the image to have a specific background, we must establish where we want it to appear, be it a forest, an enchanted city, a cliff, among others. If we don’t want to complicate our heads too much, we can establish that the background is a certain color with a gradient or a flat color without modifications.

Using the description: “A photo of a teddy bear on a skateboard in Times Square” an AI can generate this image.

Design

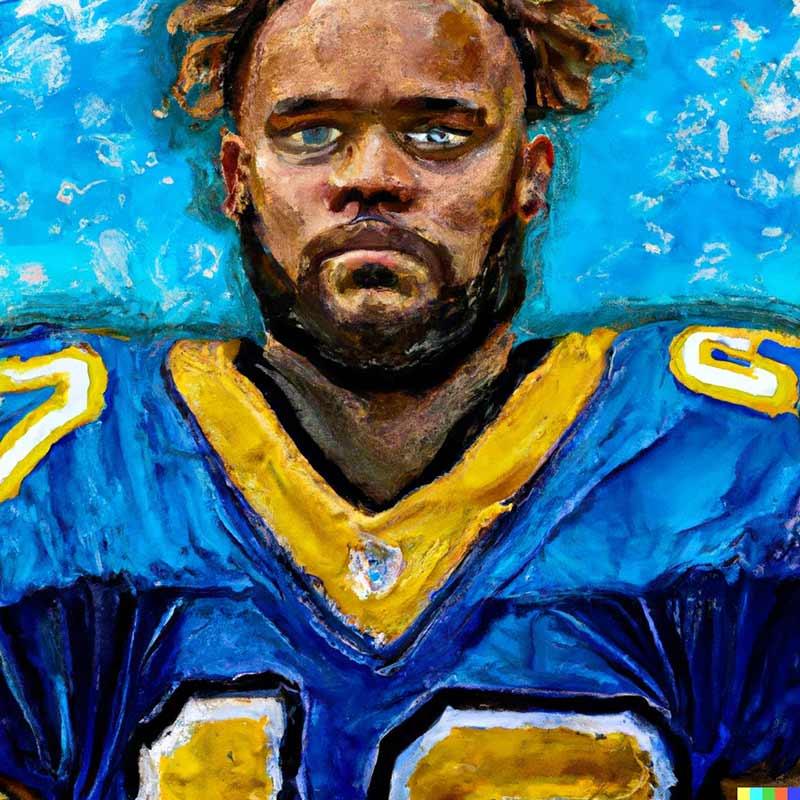

If, in addition, we want the image to have a characteristic design, be it a designer, painter, architectural style or any other, we must establish it in the description. If we like the designs of HRGiger, Van Gogh, Picasso or Dalí, we can guide the AI so that the result is similar to a creation by one of these artists.

Through this command: “A van Gogh style painting of an American football player” we will obtain a result similar to this.

particularities

Another aspect that we must take into account when using an AI to generate images is to establish whether we want the object, person or animal shown to have certain features on the body, face or surface, to have some clothing accessory among others.

With the description: «A Shiba Inu dog wearing a beret and black turtleneck» we will get images that the following.

The images that we have used to show the results that are obtained through a description, we have extracted from DALL-E.