Microsoft’s Artificial Intelligence researchers have made a rather elementary mistake that ended up exposing 38 TB of confidential information, including machine learning models, passwords, backup copies, and private messages.

We know that training new Artificial Intelligence models requires massive amounts of training data, but we had never before witnessed firsthand how that data, along with far more confidential material, could be exposed in such an absurd way on the Internet, within reach of the whole world.

Massive leak in Microsoft AI

Microsoft’s AI research team, while publishing an open source training data set on GitHub, accidentally exposed 38 terabytes of additional private data, including a disk backup of two employees’ workstations. The backup includes secret information, private keys, passwords, and more than 30,000 internal Microsoft Teams messages.

The issue arose when researchers shared their files using an Azure feature called Shared Access Signature (SAS) tokens, which allow data to be shared from Azure Storage accounts. The access level can be limited to specific files only; however, in this case, the link was configured to share the entire storage account, including another 38 TB of private files.
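
To make the difference between a file-level link and an account-wide link concrete, here is a minimal sketch that inspects the query string of a SAS URL and reports how broad its scope is. It relies on the documented SAS query parameters (`ss` and `srt` appear on account-level tokens, while `sr` names the resource on service-level tokens). The function name `sas_scope` and the example URLs are hypothetical and are not taken from the incident.

```python
from urllib.parse import urlsplit, parse_qs


def sas_scope(url: str) -> str:
    """Classify a SAS URL as account-wide, container-level, or blob-level.

    Only looks at the query parameters; it does not contact Azure.
    """
    params = parse_qs(urlsplit(url).query)

    # Account SAS tokens carry both 'ss' (services) and 'srt' (resource types).
    if "ss" in params and "srt" in params:
        return "account"

    # Service SAS tokens carry 'sr': 'c' for a container, 'b' for a single blob.
    resource = params.get("sr", [""])[0]
    return {"c": "container", "b": "blob"}.get(resource, "unknown")


# Hypothetical URLs for illustration only (signatures are placeholders).
narrow = ("https://exampleaccount.blob.core.windows.net/models/model.ckpt"
          "?sv=2021-08-06&sr=b&sp=r&se=2024-01-01T00:00:00Z&sig=PLACEHOLDER")
broad = ("https://exampleaccount.blob.core.windows.net/"
         "?sv=2021-08-06&ss=b&srt=sco&sp=rwdl&se=2051-01-01T00:00:00Z&sig=PLACEHOLDER")

print(sas_scope(narrow))
print(sas_scope(broad))
```

A link of the first kind exposes a single file, while a link of the second kind covers everything in the storage account, which is the situation described above.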

This case is an example of the new risks organizations face when they begin to harness the power of AI more broadly, as more engineers now work with massive amounts of training data. As data scientists and engineers race to bring new AI-based solutions to production, the enormous amounts of data they handle require additional controls and security safeguards.

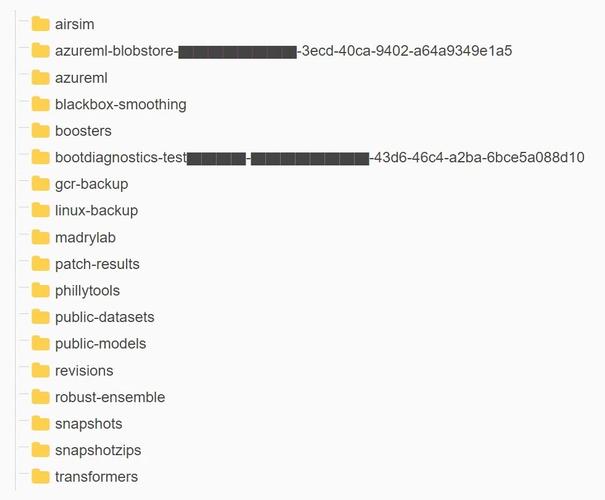

The Wiz research team found the Microsoft-owned GitHub repository, named robust-models-transfer. The repository belongs to Microsoft’s AI research division, and its purpose is to provide open source code and AI models for image recognition. It also included machine learning models belonging to a 2020 research article titled “Do Adversarially Robust ImageNet Models Transfer Better?”

Repository readers were instructed to download the models from an Azure Storage URL. However, this URL allowed access to more than just open source models. It was configured to grant permissions on the entire storage account, mistakenly exposing additional private data, including Microsoft service passwords, secret keys, and more than 30,000 internal Microsoft Teams messages from 359 Microsoft employees.

The leak has already been fixed

Microsoft has announced that it has already taken steps to correct the egregious security error. The repository named “robust-models-transfer” is no longer accessible. To make matters worse, until access was revoked, anyone could not only browse that confidential data: it was not even restricted to “read-only” mode.

In addition to the overly permissive access scope, the token was also misconfigured to allow “full control” permissions instead of read-only. That is, an attacker could not only see all the files in the storage account, but could also delete and overwrite existing files.

In practice, an attacker could have injected malicious code into every AI model in this storage account, and any user who trusted Microsoft’s GitHub repository and downloaded those models could have been infected by it.
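
The gap between “read-only” and “full control” is visible in the token itself. As a rough sketch, the `sp` query parameter of a SAS URL lists the granted permissions as single letters (for example `r` for read, `l` for list, `w` for write, `d` for delete). The helper below flags any permission that would let a holder of the link modify data. The name `mutating_permissions` and the example URLs are hypothetical, and the check covers only the four most common modifying flags.

```python
from urllib.parse import urlsplit, parse_qs

# Permission letters that allow changing or removing data (not exhaustive).
MUTATING_FLAGS = {"a": "add", "c": "create", "w": "write", "d": "delete"}


def mutating_permissions(url: str) -> list[str]:
    """Return the names of modifying permissions granted by a SAS URL."""
    params = parse_qs(urlsplit(url).query)
    granted = params.get("sp", [""])[0]
    return [name for flag, name in MUTATING_FLAGS.items() if flag in granted]


# Hypothetical URLs for illustration only (signatures are placeholders).
read_only = ("https://exampleaccount.blob.core.windows.net/models/model.ckpt"
             "?sv=2021-08-06&sr=b&sp=r&sig=PLACEHOLDER")
full_control = ("https://exampleaccount.blob.core.windows.net/"
                "?sv=2021-08-06&ss=b&srt=sco&sp=rwdlac&sig=PLACEHOLDER")

for link in (read_only, full_control):
    risky = mutating_permissions(link)
    print("read-only" if not risky else "can modify data: " + ", ".join(risky))
```

A link meant for public downloads should come back with an empty list; anything else means the people downloading the files could also alter or delete them.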